Thread-Safe Classes: GCD vs Actors

Old problem - modern solution

Updated 30.10.2025: UnfairLock info added

This time, I want to focus more on a pure coding task — and what can be more intriguing than multithreading, data races, and concurrency? Moreover, these are common and catchy topics in tech interviews. Such themes are always an inspiration for me because they’re educational, useful, and often highlight areas you’d never expect to be problematic.

Our question is:

You have a pretty plain class that stores cached values in a dictionary. Add whatever you think is needed to make this class thread-safe and, if possible, performant.

Non-Safe Class

Let’s start with the simplest form — a shared class with a dictionary:

    final class Cache {
        private var cache = [String: Any]()

        func get(_ key: String) -> Any? {
            cache[key]
        }

        func set(_ key: String, value: Any) {
            cache[key] = value
        }
    }

This class looks fine, but it’s not thread-safe.

If two threads call set() and get() simultaneously, the dictionary ([String: Any]) may be mutated while being read — leading to data races, crashes, or corrupted state.

❌ Race condition example

Thread A is writing

    cache["id"] = value

Thread B is reading

    cache["id"]

Both access the same memory region without synchronization → undefined behavior.
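To see the race in practice, here is a minimal sketch that hammers one shared instance from several threads. UnsafeCache mirrors the class above; provokeRace and its iteration count are hypothetical names chosen for this illustration. Because the behavior is undefined, a crash is likely but not guaranteed on any given run.

```swift
import Foundation

// Same shape as the Cache class above: no synchronization at all.
final class UnsafeCache {
    private var cache = [String: Any]()

    func get(_ key: String) -> Any? {
        cache[key]
    }

    func set(_ key: String, value: Any) {
        cache[key] = value
    }
}

// Hypothetical helper: runs many iterations in parallel on several threads,
// all touching the same dictionary. Calling it is undefined behavior by design,
// so expect a crash (often a bad memory access) or silently corrupted state.
func provokeRace(iterations: Int = 100_000) {
    let shared = UnsafeCache()
    DispatchQueue.concurrentPerform(iterations: iterations) { i in
        shared.set("key-\(i % 8)", value: i)   // unsynchronized write
        _ = shared.get("key-\(i % 8)")         // unsynchronized read
    }
}
```

Nothing here calls provokeRace() automatically. Call it from a test target or a playground, ideally with Thread Sanitizer enabled, which reports the data race even on runs that do not crash.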

Thread-Safe Cache (Concurrent Queue, No Barrier)

As a first step towards a fix, we can route all access to cache through a concurrent queue, with synchronous reads and asynchronous writes:

    final class ThreadSafeCache {
        private var cache = [String: Any]()
        private let queue = DispatchQueue(label: "cache", attributes: .concurrent)

        func get(_ key: String) -> Any? {
            queue.sync {
                cache[key]
            }
        }

        func set(_ key: String, value: Any) {
            queue.async {
                self.cache[key] = value
            }
        }
    }

✅ What’s fixed?

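All access now goes through a single queue, which gives us one place to add synchronization

Reads (sync) hand the value back to the caller before it continues

Writes (async) return immediately, though they are not yet serialized

⚠️ What’s not ideal?

Without .barrier, a concurrent queue is free to run a write at the same time as reads or other writes.

So this version is still not thread-safe: a write that overlaps a read or another write is the same data race as before, and it can still crash or corrupt the dictionary.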

⚙️ Could set be .sync?

Technically, yes. You could write:

    func set(_ key: String, value: Any) {
        queue.sync {
            self.cache[key] = value
        }
    }

and the caller would get a stronger guarantee:

sync ensures that the write has happened before set returns

On its own, though, a plain sync on a concurrent queue does not make the write exclusive: it can still overlap with reads and other writes unless the queue is serial or the block is submitted with .barrier

BUT — the real difference is in behavior and risk.

Let’s briefly recap the main difference between sync and async, which lies in how they handle execution and blocking. A sync call runs synchronously, meaning the current thread waits until the operation is complete before continuing. It is blocking: no code after the call runs until the block finishes. This makes it predictable but also potentially dangerous if used incorrectly, for example when calling sync on the same serial queue that is already executing the calling code, which causes a deadlock.

An async call runs asynchronously, meaning the operation is scheduled to run later, and the current thread continues immediately without waiting. It is non-blocking, allowing other work to proceed while the queued task executes in the background. This improves responsiveness and concurrency but requires additional coordination if you need to know when the task completes.

In short, sync waits for the task to finish, while async schedules the task and returns immediately. Let’s take a closer look!
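The difference can be sketched in a few lines. The function name, queue label, and event strings below are made up for this illustration; the semaphore is there only so the demo can wait for the async block before returning.

```swift
import Dispatch

func syncVersusAsync() -> [String] {
    let queue = DispatchQueue(label: "demo.sync-vs-async") // hypothetical label, serial queue
    var events = [String]()

    // sync: the caller waits, so the block's effect is visible on the next line.
    queue.sync { events.append("sync block finished") }
    events.append("caller continues after sync")

    // async: the call returns right away and the block runs later on the queue.
    let finished = DispatchSemaphore(value: 0)
    queue.async { finished.signal() }

    // Without this wait, the function could return before the async block has run.
    finished.wait()
    events.append("async block finished")

    return events
}
```

With sync, ordering comes for free. With async, you need some extra signal (here a semaphore, in real code often a completion handler) to know when the work is done.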

🛑 Why is .sync on a concurrent queue risky?

Calling .sync from another thread is fine, but calling it from a block that is already running on the same queue is dangerous.

Example:

    queue.sync {
        set("x", value: 1) // calls queue.sync again inside!
    }

On a serial queue this is a guaranteed deadlock, because the inner .sync waits for its block to finish, but that block can’t start until the outer one finishes (it’s the same queue). On a concurrent queue a plain nested .sync usually gets through, but as soon as either of the two blocks is a barrier, the same deadlock appears.

💥 So, if there’s any chance your set could be called from within another block on queue, you can hang your program.
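One way to turn such a silent hang into an immediate, debuggable failure is dispatchPrecondition, which traps when its condition does not hold. The sketch below assumes a variant of the cache whose writes are synchronous barriers; GuardedCache and its queue label are hypothetical names, not part of the original class.

```swift
import Dispatch

final class GuardedCache {
    private var cache = [String: Any]()
    private let queue = DispatchQueue(label: "cache.guarded", attributes: .concurrent)

    func get(_ key: String) -> Any? {
        // Trap early if we are already running on `queue`.
        dispatchPrecondition(condition: .notOnQueue(queue))
        return queue.sync { cache[key] }
    }

    func set(_ key: String, value: Any) {
        // A barrier sync issued from inside `queue` would wait on itself forever,
        // so fail loudly instead of hanging.
        dispatchPrecondition(condition: .notOnQueue(queue))
        queue.sync(flags: .barrier) { cache[key] = value }
    }
}
```

Called from any other thread or queue, the class behaves like a normal cache. Called from inside a block on its own queue, it stops at the precondition, which is much easier to diagnose than a frozen thread.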

🚦 Why .async is the safer pattern

Using .async avoids this risk:

It never blocks the caller

It simply schedules the write on the queue

It cannot deadlock the caller, because the caller never waits

That’s why .async is a common GCD pattern for writes, especially when you don’t control all call sites. Keep in mind that on a concurrent queue, ordering and exclusivity come from the .barrier flag, not from .async itself.

Concurrent Reads, Serialized Writes (Barrier)

The best-practice pattern is called Concurrent Reads, Serialized Writes.

We use .barrier to ensure that writes execute exclusively, blocking concurrent reads and other writes while updating shared state.

    final class ThreadSafeCache {
        private var cache = [String: Any]()
        private let queue = DispatchQueue(label: "cache", attributes: .concurrent)

        func get(_ key: String) -> Any? {
            queue.sync {
                cache[key]
            }
        }

        func set(_ key: String, value: Any) {
            queue.async(flags: .barrier) {
                self.cache[key] = value
            }
        }
    }

    // or the migrated Swift Concurrency version

    final class ThreadSafeCache: @unchecked Sendable {
        private var cache: [String: Sendable] = [:]
        private let queue = DispatchQueue(label: "cache", attributes: .concurrent)

        func get(_ key: String) -> Sendable? {
            queue.sync {
                cache[key]
            }
        }

        func set(_ key: String, value: Sendable) {
            queue.async(flags: .barrier) {
                self.cache[key] = value
            }
        }
    }

✅ What’s improved?

Reads: Can run concurrently.

Writes: Wait until all reads complete, then execute exclusively.

No races, no inconsistent reads.

Barriers only take effect on concurrent queues that you create yourself. On a serial queue or a global concurrent queue, a barrier block behaves like a regular async call.
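A quick way to sanity-check the barrier pattern is a small stress run. The sketch below is an assumption-laden illustration: BarrierCache stores Int values for simplicity, and the count property and stressBarrierCache helper are hypothetical additions that the class above does not have.

```swift
import Foundation

final class BarrierCache: @unchecked Sendable {
    private var cache = [String: Int]()
    private let queue = DispatchQueue(label: "cache.barrier", attributes: .concurrent)

    func get(_ key: String) -> Int? {
        queue.sync { cache[key] }            // reads may run concurrently
    }

    func set(_ key: String, value: Int) {
        queue.async(flags: .barrier) {       // writes run exclusively
            self.cache[key] = value
        }
    }

    var count: Int {
        queue.sync { cache.count }
    }
}

// Writes 1,000 distinct keys from several threads, then reports how many landed.
func stressBarrierCache() -> Int {
    let cache = BarrierCache()
    DispatchQueue.concurrentPerform(iterations: 1_000) { i in
        cache.set("key-\(i)", value: i)
        _ = cache.get("key-\(i)")
    }
    // This sync read is enqueued after every barrier write, so it waits for all of them.
    return cache.count
}
```

Since every key is distinct and each read queued behind a barrier waits for that barrier to finish, the final count should equal the number of iterations.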

OSAllocatedUnfairLock

Introduced in iOS 16 / macOS 13, OSAllocatedUnfairLock is a modern Swift wrapper around os_unfair_lock with proper memory management and Sendable safety.

Apple Recommendation: If you’ve existing Swift code that uses os_unfair_lock, change it to use OSAllocatedUnfairLock to ensure correct locking behavior.

It lives in the os module. Although it is declared as a struct, it does not behave like a typical value type: copies of it refer to the same underlying heap-allocated lock.

Example:

    import os

    final class ModernUnfairLockCache {
        private var cache = [String: Any]()
        private let lock = OSAllocatedUnfairLock()

        func get(_ key: String) -> Any? {
            lock.withLock {
                cache[key]
            }
        }

        func set(_ key: String, value: Any) {
            lock.withLock {
                cache[key] = value
            }
        }
    }

It has quite a few advantages. OSAllocatedUnfairLock provides a Swift-native API that is both type-safe and memory-managed, so you don’t need to deal with raw pointers or manual initialization. It includes a convenient withLock { ... } method that automatically locks and unlocks around a critical section, removing the risk of forgetting to release the lock. The lock is ARC-safe, with its lifetime managed automatically by Swift, so no manual memory management is required. It conforms to Sendable, so it can be shared across threads and tasks in Swift Concurrency code. In terms of performance, it is built on the same underlying os_unfair_lock primitive, so speed and efficiency should be essentially the same. Unlike its C-based predecessor, it requires no C interoperability: the API is fully Swift-native with clean, expressive syntax. Finally, it is safer to use, preventing the undefined behavior that can occur when an os_unfair_lock is moved or copied in memory.
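OSAllocatedUnfairLock can also own the state it protects, via init(initialState:), so the dictionary is reachable only inside withLock. The following is a sketch under that assumption: LockedCache and its generic Value parameter are hypothetical, and values are constrained to Sendable, which may also avoid the Sendable diagnostics that the Any-based version can produce under strict concurrency checking.

```swift
import os

// Hypothetical generic variant: the dictionary lives inside the lock itself.
@available(macOS 13.0, iOS 16.0, tvOS 16.0, watchOS 9.0, *)
final class LockedCache<Value: Sendable>: Sendable {
    private let storage = OSAllocatedUnfairLock(initialState: [String: Value]())

    func get(_ key: String) -> Value? {
        // `state` is the protected dictionary, accessible only while the lock is held.
        storage.withLock { state in state[key] }
    }

    func set(_ key: String, value: Value) {
        storage.withLock { state in state[key] = value }
    }
}
```

With this shape there is no separate stored property that could be touched by accident without taking the lock. As with any unfair lock, keep the critical section short.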

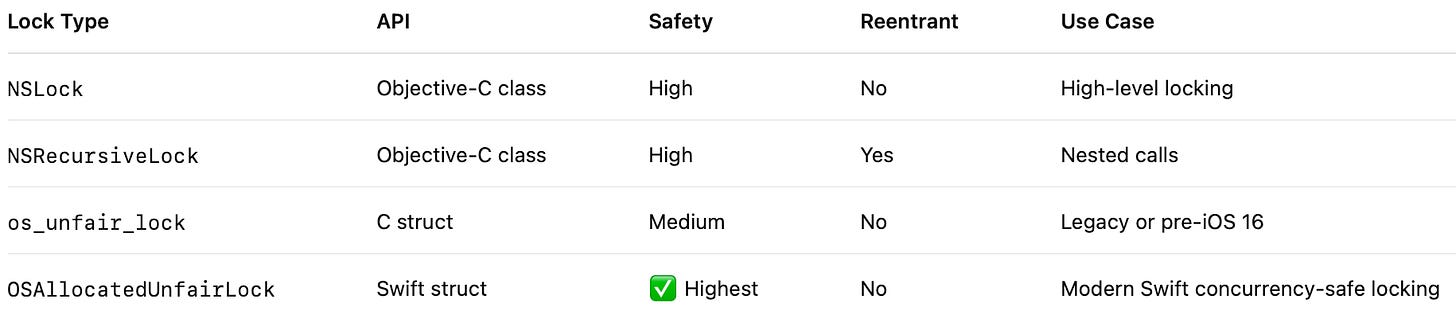

Oh, I wish Substack supported tables. This is a comparison table of the locks we might use in modern Swift:

Swift Actor Version (Full Concurrency Safety)

With Swift Concurrency, we can eliminate queues and barriers entirely. Actors provide isolation: only one task can access actor-isolated state at a time.

    actor ThreadSafeCache {
        private var cache = [String: Sendable]()

        func get(_ key: String) -> Sendable? {
            cache[key]
        }

        func set(_ key: String, value: Sendable) {
            cache[key] = value
        }
    }

✅ What actors guarantee

cache is isolated — no two tasks can access it simultaneously.

All calls are serialized by Swift’s concurrency runtime

You can safely call from multiple async contexts:

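    let cache = ThreadSafeCache()

    Task {
        await cache.set("id", value: 42)
    }

    Task {
        print(await cache.get("id") ?? "nil")
    }

The two tasks are not ordered relative to each other, so the second one may print nil if it runs first.

No explicit locks, queues, or barriers: the compiler and runtime handle synchronization.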

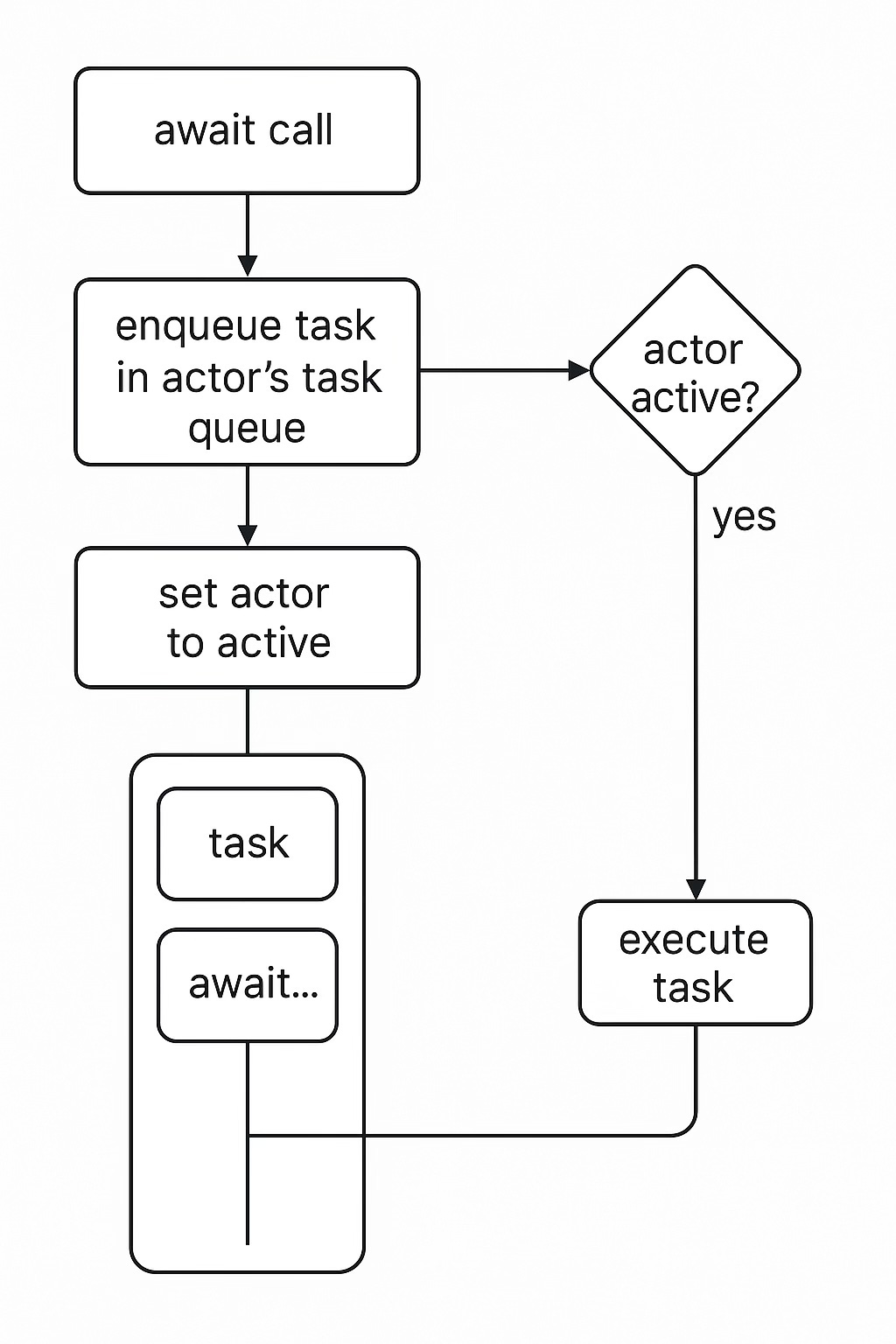

🔬 Under the Hood: How Actors Work

Actors internally manage a queue of pending tasks (jobs) and an isActive flag. When a task calls an actor method, Swift enqueues it to the actor’s executor. When idle, the actor becomes active, runs one job at a time, and processes its queue sequentially.

This simplified diagram shows how an actor takes jobs from its internal queue and executes them one at a time.

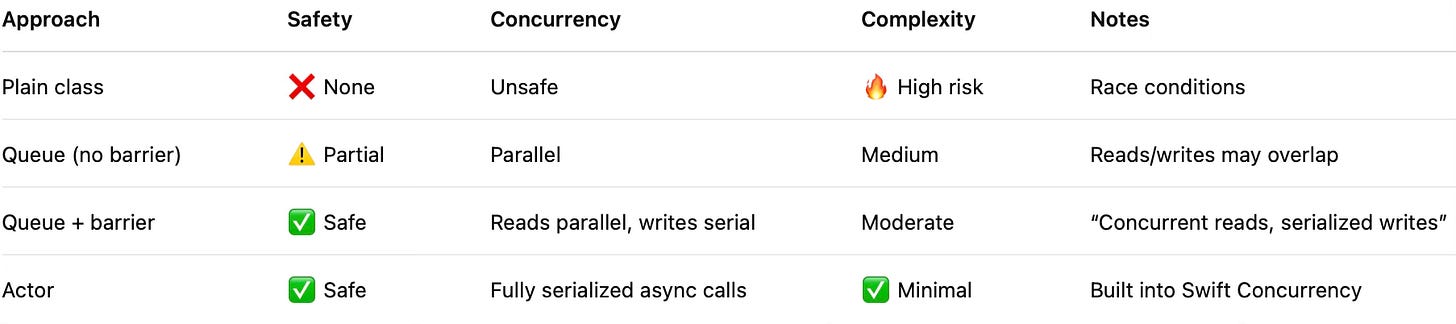
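Because an actor runs one job at a time, a read-modify-write placed inside a single actor method cannot interleave with other calls. Doing the same from outside as a separate get followed by a set gives no such guarantee, since other tasks can run between the two awaits. The sketch below illustrates the first case; HitCounter and countHits are hypothetical names introduced only for this example.

```swift
actor HitCounter {
    private var hits = [String: Int]()

    // The whole read-modify-write runs as one actor job.
    func increment(_ key: String) {
        hits[key, default: 0] += 1
    }

    func count(for key: String) -> Int {
        hits[key] ?? 0
    }
}

// Spawns many child tasks that all increment the same key.
func countHits(tasks: Int = 1_000) async -> Int {
    let counter = HitCounter()
    await withTaskGroup(of: Void.self) { group in
        for _ in 0..<tasks {
            group.addTask { await counter.increment("id") }
        }
        // The group waits for all child tasks before the closure returns.
    }
    return await counter.count(for: "id")
}
```

No increments are lost even though the tasks run in parallel, because each increment is a single synchronous step inside the actor.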

Summary

Below you can find a small table comparing all the described solutions by their main points. If you want performance and safety, use .barrier. For modern approaches and full compatibility with Swift 6.2, actors are the way to go.

Apple Documentation

DispatchQueue — Official API reference

https://developer.apple.com/documentation/dispatch/dispatchqueue

Dispatch Barrier — Explanation of

.barrier semantics (works only with concurrent queues)

https://developer.apple.com/documentation/dispatch/dispatch-barrier

dispatch_barrier_async — Function-level reference with behavioral details

https://developer.apple.com/documentation/dispatch/1452797-dispatch_barrier_async

DispatchQueue.Attributes — Includes concurrent and notes on respecting barriers

https://developer.apple.com/documentation/dispatch/dispatchqueue/attributes

Swift Language Guide: Concurrency — Covers actor, async/await, and structured concurrency

https://docs.swift.org/swift-book/documentation/the-swift-programming-language/concurrency

Swift Evolution SE-0306: Actors — Official design proposal for Swift Actors

https://github.com/apple/swift-evolution/blob/main/proposals/0306-actors.md

WWDC 2017 — Modernizing Grand Central Dispatch Usage (Session 706)

OSAllocatedUnfairLock
